Understanding the Basics: Data Mesh, Data Fabric, and Data Lineage Explained

An agile, data-driven enterprise rests on a cohesive data architecture. Data Mesh and Data Fabric are two architectural approaches that have risen in popularity for managing data in complex, distributed environments. They have distinct characteristics and purposes, and both can benefit from integrating data lineage. In this blog post, we’ll break down Data Mesh vs. Data Fabric and how each relates to lineage.

Understanding Data Mesh

Gartner defines Data Mesh as “a cultural and organizational shift for data management focusing on federation technology that emphasizes the authority of localized data management. Data Mesh is intended to enable easily accessible data by the business. Data assets are analyzed for usage patterns by subject matter experts, who determine data affinity, and then the data assets are organized as data domains. Domains are contextualized with business context descriptors. Subject matter experts use the patterns and domains to define and create data products. Data products are registered and made available for reuse relative to business needs.”

In other words, Data Mesh is a decentralized and domain-oriented approach to data architecture, primarily focused on data productization and autonomy.

Benefits of Data Mesh

According to Manta partner IBM, Data Mesh provides “a decentralized data architecture that organizes data by a specific business domain—for example, marketing, sales, customer service, and more—providing more ownership to the producers of a given dataset.”

Data Mesh is an emerging architectural paradigm that aims to address the challenges associated with data management and analytics in large-scale, complex organizations. Here are some of the benefits of Data Mesh:

- Domain-driven data ownership: In a Data Mesh, data ownership and responsibility are shifted from a centralized data team to individual domain teams. Each team is responsible for its own data products, including data collection, transformation, storage, and consumption. This domain-driven approach encourages a sense of ownership and accountability, leading to faster and more accurate data insights.

- Scalability and autonomy: By decentralizing data responsibilities, Data Mesh allows organizations to scale efficiently. As the organization grows and new domains emerge, each domain team can independently manage its data infrastructure and analytics. This autonomy reduces bottlenecks and minimizes the need for coordination across different teams.

- Data democratization: Data Mesh promotes data democratization by empowering domain experts to make data-driven decisions without relying on a centralized data team. Domain teams have better visibility and control over their data, leading to quicker and more informed decision-making.

- Reduced data silos: Traditional data architectures often result in data silos, where different teams or departments store and manage their data separately, leading to duplication and inefficiency. Data Mesh encourages data sharing and collaboration by establishing data products as shareable assets across the organization.

- Faster time-to-insights: With domain teams taking charge of data, the turnaround time for data insights is reduced. Teams can focus on their specific needs, leading to quicker data processing, analysis, and decision-making.

- Data quality and governance: Because Data Mesh is decentralized, with no single overarching body managing all data, it places responsibility for data quality and governance at the source, with the domain teams that produce the data.

It's worth noting that implementing Data Mesh is not without its challenges, such as cultural shifts, coordination overhead, and potential duplication of efforts. However, the benefits of increased agility, scalability, and democratization of data make Data Mesh an attractive option for organizations seeking to harness the full potential of their data assets.

Real-World Examples of Data Mesh

What does that look like in practice? Let's walk through an example.

In this example, we'll consider a financial services company that offers various products and services, including banking, lending, investment management, and insurance. The company faces data management challenges due to the growing volume of data, complex data processing needs, and the requirement to meet regulatory compliance.

The financial services company first identifies key domains based on its core business functions. These domains could include "Banking Operations", "Loan Management," "Investment Portfolio", "Customer Relations", and "Risk Management." Then, each domain is assigned a dedicated data team responsible for managing all data-related tasks within that domain. For instance, the "Banking Operations" domain team handles data related to customer transactions, account balances, and branch operations, while the "Loan Management" domain team deals with loan applications, approvals, and repayment data.

Domain teams treat data as valuable products that are offered to other teams in the company. For example, the "Risk Management" team develops data products like "Fraud Detection API", "Credit Scoring Model", and "Market Risk Analytics Dashboard."

The domain teams define data contracts that specify the data schemas, quality standards, and usage guidelines for their data products. For example, the "Investment Portfolio" team ensures that their data product includes attributes like asset types, market values, and historical returns, and they provide this data through standardized APIs. Each domain team builds and manages its own data infrastructure using suitable technologies or may reuse the shared infrastructure if it makes sense to them. The "Customer Relations" team may utilize Apache Kafka for real-time data streams from customer interactions, while the "Risk Management" team sets up cloud-based data lakes and data warehouses for storage and analysis.
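
To make the idea of a data contract more concrete, here is a minimal Python sketch. The `DataContract` class, the product name `portfolio_positions`, and the field names are assumptions made for illustration; they are loosely based on the attributes mentioned above and are not taken from any particular tool. The sketch covers only the schema part of a contract, not quality standards or usage guidelines.

```python
from dataclasses import dataclass


@dataclass
class DataContract:
    """Hypothetical contract for a data product: required fields and their types."""
    product: str
    owner: str
    schema: dict  # field name -> expected Python type

    def violations(self, record: dict) -> list:
        """Return human-readable problems found in a single record."""
        problems = []
        for field, expected in self.schema.items():
            if field not in record:
                problems.append(f"missing field: {field}")
            elif not isinstance(record[field], expected):
                problems.append(
                    f"wrong type for {field}: {type(record[field]).__name__}"
                )
        return problems


# Illustrative contract; the names are assumptions, not a real schema.
portfolio_contract = DataContract(
    product="portfolio_positions",
    owner="Investment Portfolio",
    schema={"asset_type": str, "market_value": float, "historical_returns": list},
)

good = {"asset_type": "bond", "market_value": 1250.0, "historical_returns": [0.02, 0.01]}
bad = {"asset_type": "equity", "market_value": "n/a"}

print(portfolio_contract.violations(good))
print(portfolio_contract.violations(bad))
```

A consuming team could run a check like this before accepting a new batch, so that a breaking schema change is caught at the boundary between domains rather than in a downstream report.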

To facilitate data discovery and collaboration, the financial services company implements a centralized data catalog. This data catalog serves as a one-stop repository for all available data products, enabling different domain teams to discover and consume each other's data easily. For instance, the "Loan Management" team can use the "Credit Scoring Model" provided by the "Risk Management" domain to assess loan applications. Each domain team takes ownership of data quality and adheres to data governance policies. They implement data validation checks, data lineage tracking, and data monitoring to ensure the accuracy and reliability of their data products.
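
As a rough illustration of the catalog idea, the sketch below keeps a registry of data products in memory and lets other teams search it by tag. The `DataCatalog` class, its method names, the descriptions, and the tags are all hypothetical. A real catalog would add persistence, access control, and much richer metadata.

```python
class DataCatalog:
    """Hypothetical in-memory catalog: domains register products, consumers search them."""

    def __init__(self):
        self._products = {}

    def register(self, name, domain, description, tags=()):
        # Refuse silent overwrites so two domains cannot claim the same product name.
        if name in self._products:
            raise ValueError(f"data product already registered: {name}")
        self._products[name] = {
            "name": name,
            "domain": domain,
            "description": description,
            "tags": set(tags),
        }

    def search(self, tag):
        """Return the names of products carrying the given tag, sorted for stable output."""
        return sorted(p["name"] for p in self._products.values() if tag in p["tags"])

    def owner_of(self, name):
        """Return the domain that owns a registered product."""
        return self._products[name]["domain"]


catalog = DataCatalog()
catalog.register(
    "Credit Scoring Model", "Risk Management",
    "Scores loan applicants", tags=["credit", "lending"],
)
catalog.register(
    "Fraud Detection API", "Risk Management",
    "Flags suspicious transactions", tags=["fraud"],
)

# The Loan Management team looks for anything useful for lending decisions.
print(catalog.search("lending"))
print(catalog.owner_of("Credit Scoring Model"))
```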

With domain teams having autonomy over their data, they can experiment with advanced analytics techniques, machine learning models, and other innovative approaches specific to their domain needs. For instance, the "Investment Portfolio" team can experiment with reinforcement learning algorithms to optimize portfolio allocations.

In summary, the Data Mesh approach helps the financial services company to harness the power of its data, enabling data-driven decision-making, enhancing customer experiences, and staying competitive in a rapidly evolving industry.

Exploring Data Fabric

Gartner defines Data Fabric as “an emerging data management design for attaining flexible, reusable and augmented data integration pipelines, services and semantics. A Data Fabric supports both operational and analytics use cases delivered across multiple deployment and orchestration platforms and processes. Data fabrics support a combination of different data integration styles and leverage active metadata, knowledge graphs, semantics and ML to augment data integration design and delivery.”

Data fabric architecture is a more centralized and integration-focused approach to data management. It aims to create a unified and consistent data layer across the entire organization. The main characteristics of Data Fabric include:

- Integration-Centric: Data Fabric emphasizes data integration and consolidation. It seeks to provide a seamless and coherent data access layer that abstracts the underlying data sources and systems.

- Unified View: Data Fabric provides a unified view of data, hiding the complexities of underlying data sources. This allows data consumers to access and analyze data as if it were coming from a single source.

- Data Virtualization: Data Fabric often employs data virtualization techniques, where data is accessed and combined in real-time from various sources without physically moving or duplicating the data.

- Centralized Governance: Data Fabric typically enforces centralized data governance, data security, and data access controls to ensure compliance and data quality.

How Data Fabric Enables Data Integration and Accessibility

Data fabric enables data integration and accessibility by providing a unified and coherent data layer that abstracts the complexities of underlying data sources and systems. It acts as an intermediary layer between data consumers and data sources, making it easier to access and analyze data from diverse and distributed sources. Here's how Data Fabric achieves this:

- Data Virtualization: Data fabric often employs data virtualization techniques. Data virtualization allows data to be accessed, combined, and presented in real-time from various sources without physically moving or duplicating the data. This means that data consumers can access data from multiple sources as if it were coming from a single source, simplifying data integration.

- Unified View of Data: Data fabric provides a unified view of data from different systems and sources. Data from databases, data warehouses, cloud services, APIs, and other sources can be presented in a uniform manner, regardless of the differences in data structures and storage formats.

- Abstraction of Data Complexity: Data fabric abstracts the complexities of underlying data sources. It shields data consumers from having to understand the intricacies of data storage, data models, and access mechanisms used in individual data sources. Data fabric handles data transformations and mappings, making it easier for consumers to work with data.

- Data Access and Query Optimization: Data fabric optimizes data access and queries. It can intelligently route queries to the most appropriate data sources based on performance, location, and data availability, ensuring efficient data retrieval and analysis.

- Real-Time Data Integration: Data fabric enables real-time data integration, which is crucial in modern data-driven environments. Data changes from different sources can be reflected in the unified data layer almost instantly, allowing data consumers to access the most up-to-date information.

- Data Security and Governance: Data fabric enforces centralized data security and governance. It applies access controls, data masking, and encryption to ensure that only authorized users can access sensitive data. This centralization of security measures ensures consistent data protection across all data sources.

- Scalability and Flexibility: Data fabric is designed to handle large volumes of data from diverse sources. It is scalable and flexible enough to accommodate changes in data sources and requirements as the organization evolves.

Overall, Data Fabric reduces the complexity of dealing with multiple data sources and empowers users to focus on extracting insights and value from data without worrying about the underlying technical details.
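
The data virtualization idea described above can be sketched in a few lines of Python. This is a toy under stated assumptions: an in-memory SQLite database stands in for a warehouse, a plain list stands in for records returned by an API, and the table, field, and function names are invented for the example. The point is only that the combined view is computed on demand, without copying either source into a new store.

```python
import sqlite3

# Source 1: a relational database (in-memory SQLite stands in for a warehouse).
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
db.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Ada"), (2, "Grace")])

# Source 2: records as they might come back from an API (a plain list here).
api_orders = [
    {"customer_id": 1, "total": 40.0},
    {"customer_id": 1, "total": 15.5},
    {"customer_id": 2, "total": 99.0},
]


def customer_spend():
    """Virtual view: joins both sources at query time; nothing is persisted."""
    totals = {}
    for order in api_orders:
        cid = order["customer_id"]
        totals[cid] = totals.get(cid, 0.0) + order["total"]
    for cid, name in db.execute("SELECT id, name FROM customers ORDER BY id"):
        yield {"customer": name, "total_spend": totals.get(cid, 0.0)}


view = list(customer_spend())
print(view)
```

Because the view is recomputed on every call, a new order appended to `api_orders` shows up the next time `customer_spend()` runs. That is a very small-scale version of the real-time behavior described in the list above.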

Data Fabric in Practical Application

In a healthcare company, Data Fabric can be leveraged to address the challenges of managing and integrating diverse data sources, ensuring data security, and enabling real-time access to critical medical information. Let's explore a practical example of Data Fabric implementation in a healthcare company:

The healthcare company deals with various data sources, such as electronic health records (EHRs) from different hospitals and clinics, medical imaging data from diagnostic centers, patient-generated health data from wearables, and research data from clinical trials.

Data fabric is used to integrate these disparate data sources into a unified data layer, ensuring interoperability between different systems. Physicians and medical staff require real-time access to patient information for making critical decisions. Data fabric enables seamless and secure access to patient data from EHRs, lab results, medical imaging, and other sources in real-time. This helps healthcare providers deliver more personalized and efficient care to patients.

Further, healthcare data is highly sensitive and subject to strict privacy regulations (e.g., HIPAA in the United States). Data fabric enforces data security measures, including access controls, encryption, and data masking, to ensure that patient data remains secure and compliant with relevant regulations.
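
To illustrate what access controls and data masking can look like at the point of access, here is a simplified Python sketch. The roles, field names, and masking rule are assumptions chosen for the example. It is not a HIPAA compliance implementation, only a picture of the general pattern of applying one policy centrally before data reaches the consumer.

```python
# Hypothetical policy: which fields are sensitive and which roles may see them unmasked.
SENSITIVE_FIELDS = {"name", "ssn"}
ROLE_CAN_SEE_SENSITIVE = {"physician": True, "analyst": False}


def mask(value):
    """Replace all but the last two characters with asterisks."""
    text = str(value)
    return "*" * max(len(text) - 2, 0) + text[-2:]


def read_record(record, role):
    """Return a copy of the record, masked according to the caller's role."""
    if role not in ROLE_CAN_SEE_SENSITIVE:
        raise PermissionError(f"unknown role: {role}")
    if ROLE_CAN_SEE_SENSITIVE[role]:
        return dict(record)
    return {k: mask(v) if k in SENSITIVE_FIELDS else v for k, v in record.items()}


patient = {"name": "Jane Doe", "ssn": "123-45-6789", "blood_type": "O+"}

print(read_record(patient, "physician"))
print(read_record(patient, "analyst"))
```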

Data fabric also enables healthcare analysts and data scientists to access and analyze large volumes of healthcare metadata and data efficiently. This includes data for clinical research, epidemiological studies, and healthcare operations optimization.

Additionally, as healthcare IoT devices become more prevalent, Data Fabric facilitates the integration of data from IoT-enabled medical devices, such as remote patient monitoring devices and smart medical equipment, into the overall healthcare data ecosystem. Many healthcare organizations are adopting cloud-based solutions for data storage and processing. Data fabric can seamlessly integrate data from on-premises systems with cloud-based platforms like AWS, Azure, or Google Cloud, ensuring a smooth and secure data transition.

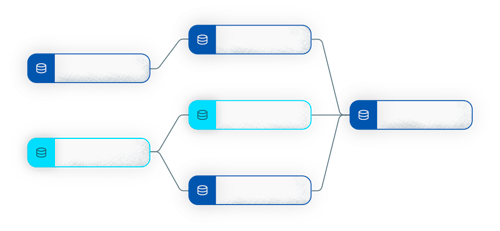

Unraveling Data Lineage

Traditionally, data lineage has been seen as a way of understanding how your data flows through all your processing systems—where the data comes from, where it’s flowing to, and what happens to it along the way. In reality, data lineage is so much more.

Why is this so important? A detailed dependency map is the core component of a modern data stack. Data lineage represents a detailed map, or data visualization, of all direct and indirect dependencies between the data entities in your environment. It allows you to gain complete visibility and a clear line of sight to uncover data blind spots throughout your data systems, while also helping ensure ethical, compliant, and efficient data management processes.

A detailed dependency map can tell you:

- What impact a change to your Data Fabric or Data Mesh will have before you actually make it, so you can automate change management

- How changing a bonus calculation algorithm in the sales data mart will affect your weekly financial forecast report

- Where data that is heavily regulated is being used and for what purpose

- Which subset of test cases will cover the majority of data flow scenarios for your newly released pricing database app

- How to divide a data system into smaller chunks that can be migrated to the cloud independently without breaking other parts of the system

There are endless opportunities when you tap into the full potential of your data.
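
Impact-analysis questions like the ones in the list above come down to walking a dependency graph. The Python sketch below shows the idea with a breadth-first traversal. The asset names and edges are made up for illustration, and a real lineage tool would derive the graph automatically by scanning code and metadata rather than from a hand-written dictionary.

```python
from collections import deque

# Hypothetical lineage edges: each key feeds the assets listed as its value.
LINEAGE = {
    "sales_mart.bonus_calc": ["sales_mart.commissions"],
    "sales_mart.commissions": ["finance.weekly_forecast", "hr.payroll_export"],
    "finance.weekly_forecast": ["exec.dashboard"],
    "crm.contacts": ["marketing.segments"],
}


def downstream(asset, lineage=LINEAGE):
    """Return every asset that directly or indirectly depends on `asset`."""
    seen, queue = set(), deque([asset])
    while queue:
        for child in lineage.get(queue.popleft(), []):
            if child not in seen:  # also guards against cycles
                seen.add(child)
                queue.append(child)
    return seen


# Which assets are affected if the bonus calculation changes?
impacted = downstream("sales_mart.bonus_calc")
print(sorted(impacted))
```

In this toy graph, a change to the bonus calculation reaches the weekly forecast and everything built on it, while the unrelated CRM branch is left out of the result.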

When we look at this in the context of Data Mesh and Data Fabric, it is clear that data lineage can function within both architecture types. Especially in the context of data governance and compliance, data lineage makes sure that no matter your data architecture type or model, you can see where your data flows and that your data remains in compliance.

Key Considerations for Implementing Data Mesh, Data Fabric, and Data Lineage

Before implementing Data Mesh, Data Fabric, and data lineage, it’s important to understand the key differences between Data Mesh and Data Fabric:

- Centralization vs. Decentralization: Data Fabric is more centralized, aiming to create a single, unified data layer, whereas Data Mesh is decentralized, distributing data ownership and management to domain teams.

- Focus: Data Fabric primarily focuses on data integration and providing a consistent view of data across the organization. On the other hand, Data Mesh focuses on domain-oriented data productization and autonomy.

- Data Productization: While Data Mesh treats data as a product and encourages domain teams to build and offer data products, Data Fabric abstracts the underlying data sources, making it easier for data consumers to access data without worrying about the source.

In summary, Data Mesh and Data Fabric are two different approaches to data management, each with its own strengths and suitable use cases. Data Mesh is more suited for organizations with diverse domains and a need for autonomy and faster data delivery, while Data Fabric is ideal for organizations or individual teams within larger organizations seeking a centralized, unified view of data and simplified data access.

If you’re considering Data Mesh vs. Data Fabric, remember that Data Fabric is a tool while Data Mesh is an implementation concept. You can have both (a Fabric that supports a Mesh), but it’s important to think about your organization’s culture surrounding data. If you already thrive with a decentralized data architecture, it may require more of a cultural push to move to a centralized data architecture like Data Fabric.

Take the Next Step with Manta

We’ve seen data architecture change in the last few years and it doesn’t seem to be stopping any time soon. Whether you have a Data Mesh or Data Fabric architecture model, data lineage is instrumental to getting the maximum benefit from it, in both long-term use and management.

To learn more about Manta’s data lineage, download our Ultimate Guide to Data Lineage.

P.S. This post was written by a human!