Data Lineage Granularity

What are the levels or layers of lineage?

It's critical to understand how deep your lineage can go and where to dive in.

Depth of Data Lineage

Data lineage can be as automated or as manual as needed, and it comes in various levels of depth and breadth. To maximize your efficiency, you may find it helpful to move between levels of granularity and to stack the layers to build a comprehensive overview of your data. But first, you need to understand where to start.

To help you understand these layers of lineage, we've compiled a few terms to keep in mind when evaluating data lineage software. The following levels describe the depth of lineage:

Column-Level (Attribute Level) Lineage

This is the most granular level of lineage. Column-level lineage lets you trace individual properties or attributes and answer specific questions, such as “How has the profitability number been calculated?”

This level of lineage is the hardest to achieve, simply because of scale. If every system has 100 datasets and each dataset has 100 attributes, you’d be tracking 10,000 attributes (and their interactions) per system. This level is often seen as too detailed, yet only attribute-level lineage lets you use lineage automatically to answer questions about specific attributes.
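To make this concrete, here is a minimal Python sketch of column-level lineage as a directed graph, assuming the lineage has already been collected as (source column, target column) pairs. All column names and the `upstream` helper are hypothetical. Tracing upstream from a report attribute answers the “how has the profitability number been calculated?” question by listing every column that feeds it.

```python
from collections import deque

# Hypothetical column-level lineage: (source column, target column) pairs.
EDGES = [
    ("crm.orders.amount", "dwh.sales.revenue"),
    ("erp.costs.unit_cost", "dwh.sales.cost"),
    ("erp.orders.quantity", "dwh.sales.cost"),
    ("dwh.sales.revenue", "bi.kpi.profitability"),
    ("dwh.sales.cost", "bi.kpi.profitability"),
]


def upstream(column, edges):
    """Return every column that feeds `column`, directly or indirectly."""
    parents = {}
    for src, dst in edges:
        parents.setdefault(dst, []).append(src)
    seen, queue = set(), deque([column])
    while queue:  # breadth-first walk against the direction of data flow
        for src in parents.get(queue.popleft(), []):
            if src not in seen:
                seen.add(src)
                queue.append(src)
    return seen


print(sorted(upstream("bi.kpi.profitability", EDGES)))
```

In a real environment the same traversal runs over thousands of attributes per system, which is why this level is hard to maintain by hand but straightforward to query once it is collected automatically.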

Manta can help you tackle column-level lineage, saving you time and money.

Business Lineage

This is the easiest level of lineage for non-technical users to consume. It is often built manually, which is a costly and time-consuming process, and the result can quickly become outdated. It is best built by overlaying automated technical lineage (column-level lineage) with a conceptual model that puts lineage into simplified business terminology.
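The overlay described above can be sketched as follows, assuming the technical lineage is available as column-level edges and the conceptual model is a simple mapping from technical columns to business terms. Both the data and the `business_lineage` helper are hypothetical. Columns without a business term are skipped, so intermediate warehouse fields disappear from the business view.

```python
# Hypothetical technical (column-level) lineage, as automated scanning might produce.
TECHNICAL_EDGES = [
    ("crm.orders.amount", "dwh.sales.revenue"),
    ("dwh.sales.revenue", "bi.kpi.profitability"),
    ("erp.costs.unit_cost", "dwh.sales.cost"),
    ("dwh.sales.cost", "bi.kpi.profitability"),
]

# Hypothetical conceptual model: technical column -> business term.
# Unmapped columns (e.g. intermediate warehouse fields) are hidden.
BUSINESS_TERMS = {
    "crm.orders.amount": "Order Value",
    "erp.costs.unit_cost": "Product Cost",
    "bi.kpi.profitability": "Profitability",
}


def business_lineage(edges, terms):
    """Collapse technical lineage into edges between business terms,
    walking through columns that have no business term."""
    children = {}
    for src, dst in edges:
        children.setdefault(src, []).append(dst)
    result = set()
    for start, term in terms.items():
        stack, seen = list(children.get(start, [])), set()
        while stack:
            node = stack.pop()
            if node in seen:
                continue
            seen.add(node)
            if node in terms:
                result.add((term, terms[node]))  # stop at the next business term
            else:
                stack.extend(children.get(node, []))
    return result


print(sorted(business_lineage(TECHNICAL_EDGES, BUSINESS_TERMS)))
```

Because the business view is derived from the technical lineage rather than drawn by hand, it is refreshed whenever the technical lineage is rescanned.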

System or Application-Level Lineage

System or application-level lineage is often used by enterprise architects to understand the interconnections and data flows between systems at a very high level, with further work then delegated to their respective teams.

This is the level most companies start with when designing lineage manually. At the beginning there are only a handful of systems of interest, and a manual approach makes it possible to cover their interconnections in a few days.

Dataset-Level Lineage

Dataset-level lineage goes one level deeper than application- or system-level lineage. It covers lineage at the level of the entities that most teams work with (e.g., Customer, Product). This is sometimes referred to as "conceptual" or "modeling" lineage.

This level gains importance with the adoption of the Data Mesh concept, where Data Products are often groups of entity data, and consumers often need to understand where that data originates. At the same time, owners/producers need to understand how their data products are used.
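Both questions from the Data Mesh discussion above, where a data product's data comes from and who uses it, reduce to an upstream or downstream walk over dataset-level edges. A minimal sketch, with hypothetical dataset names and a hypothetical `reachable` helper:

```python
# Hypothetical dataset-level lineage across domains: (source, target) pairs.
DATASET_EDGES = [
    ("crm.Contact", "sales.Customer"),
    ("sales.Customer", "marketing.CustomerSegments"),
    ("marketing.CustomerSegments", "finance.ChurnForecast"),
    ("marketing.CustomerSegments", "bi.CampaignDashboard"),
]


def reachable(start, edges, direction):
    """Datasets reachable from `start`: 'upstream' for origins, else usage."""
    adj = {}
    for src, dst in edges:
        a, b = (dst, src) if direction == "upstream" else (src, dst)
        adj.setdefault(a, []).append(b)
    seen, stack = set(), [start]
    while stack:
        for nxt in adj.get(stack.pop(), []):
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return seen


product = "marketing.CustomerSegments"
print("origins:", sorted(reachable(product, DATASET_EDGES, "upstream")))   # consumer's question
print("usage:", sorted(reachable(product, DATASET_EDGES, "downstream")))   # owner's question
```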

Breadth of End-to-End Lineage

We hear the term end-to-end lineage often in the world of data management, but what does it actually mean? The concept of end-to-end lineage is not well defined, can mean slightly different things in different contexts, and is often used as a marketing ploy. Keep this in mind to make sure you are comparing apples to apples. The overall idea of end-to-end is breadth of coverage: simply seeing lineage from source to target. Let’s discuss what it actually means:

Organization or Enterprise-Wide Lineage

This is the most holistic view, truly covering the organization with lineage from the very original data sources to the end consumers of the data. Organization-wide lineage means covering lineage across multiple departments and technologies for all the data you have. This is the ideal breadth of coverage, and Manta is uniquely equipped to deliver it.

Domain/Department-Wide Lineage

Domain or departmental lineage has two primary use cases. The first is column-level detail that the department uses to maintain its environment. The second is conceptual, system-level, or dataset-level detail that is used by other departments. Domain or department-wide lineage is often applied to the concept of Data Mesh and Data Products: you cover lineage within your domain for your data products only. As part of the distributed concept of Data Mesh, your inputs are either data that your domain produces or data products produced by other domains that you use. Your outputs are the data products that you publish, and along with them you also publish their metadata, including lineage.

This lineage is limited in scope. You do not necessarily trace the data back to its very source or identify all the end users of the data you produce. You simply define your inputs and outputs and trace what happens to the data in between; essentially, you cover what happens in your department. This breadth of lineage is very useful for optimizing processes and increasing efficiency within the department or team.
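A minimal sketch of domain-wide lineage, assuming a domain declares its input and output data products and records the edges in between. All names and the `inputs_feeding` helper are hypothetical. The upstream walk deliberately stops at the declared inputs instead of following the data to its very source, mirroring the limited scope described above.

```python
# Hypothetical declarations for one domain.
DOMAIN_INPUTS = {"crm.Contact", "orders.OrderLines"}   # data products consumed
DOMAIN_OUTPUTS = {"sales.CustomerRevenue"}             # data products published

EDGES = [
    ("crm.raw_contacts", "crm.Contact"),  # beyond the boundary: another domain's business
    ("crm.Contact", "sales.stg_customer"),
    ("orders.OrderLines", "sales.stg_orders"),
    ("sales.stg_customer", "sales.CustomerRevenue"),
    ("sales.stg_orders", "sales.CustomerRevenue"),
]


def inputs_feeding(output, edges, inputs):
    """Walk upstream from a published output, stopping at declared inputs."""
    parents = {}
    for src, dst in edges:
        parents.setdefault(dst, []).append(src)
    found, seen, stack = set(), set(), [output]
    while stack:
        for src in parents.get(stack.pop(), []):
            if src in inputs:
                found.add(src)  # boundary reached: do not trace further
            elif src not in seen:
                seen.add(src)
                stack.append(src)
    return found


# Lineage metadata published alongside each data product.
published = {out: sorted(inputs_feeding(out, EDGES, DOMAIN_INPUTS))
             for out in DOMAIN_OUTPUTS}
print(published)
```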

Use Case Specific Lineage

When lineage is needed for a specific use case, that use case usually defines the scope, or breadth, that needs to be covered: whether it is true end-to-end or whatever the use case considers the “source” and “end point” for data pipeline visibility.

We often see that this is a slice of organization-wide lineage for a specific data domain, dataset, or just a few common data elements (CDEs). When you need lineage for specific CDEs that span the whole organization, picking the right approach gets tricky. Selecting full automation that captures everything may be like using a sledgehammer to crack a nut, especially if you never plan to scale up and cover more. On the other hand, starting with a manual approach may get you results quickly for a few CDEs but will not scale if you plan to get much more coverage in the future.
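One way to picture a use-case-specific slice is to keep only the part of organization-wide lineage that feeds the CDEs you care about. The sketch below assumes the use case treats the CDEs as end points and wants everything upstream of them; the edge list, the CDE name, and the `slice_for` helper are hypothetical.

```python
# Hypothetical organization-wide column lineage: (source, target) pairs.
ORG_EDGES = [
    ("crm.customer.id", "dwh.customer.customer_id"),
    ("dwh.customer.customer_id", "bi.report.customer_id"),
    ("erp.ledger.amount", "dwh.finance.balance"),
    ("dwh.finance.balance", "bi.report.balance"),
    ("web.logs.click", "dwh.marketing.clicks"),
]
CDES = {"bi.report.balance"}  # the end points this use case cares about


def slice_for(cdes, edges):
    """Keep only edges that lie upstream of (i.e. feed) the given CDEs."""
    parents = {}
    for src, dst in edges:
        parents.setdefault(dst, []).append(src)
    keep, stack = set(cdes), list(cdes)
    while stack:
        for src in parents.get(stack.pop(), []):
            if src not in keep:
                keep.add(src)
                stack.append(src)
    return [(s, d) for s, d in edges if d in keep]


for edge in slice_for(CDES, ORG_EDGES):
    print(edge)
```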

Application-Wide Lineage (DWH, BI)

Application-wide lineage is usually what the individual teams managing an application need to optimize their work and reduce the risk of introducing unexpected errors.

Take data warehouses, for example: many organizations have relied on them for over 30 years, and they contain a tremendous amount of business logic for various reports. Making changes to, optimizing, modernizing, or migrating such applications efficiently and in a controlled, low-risk manner requires untangling 30 years of complexity. Depending on the need, this often requires very detailed and accurate lineage.

Looking to the future while learning from the past: when building a new data warehouse, it is good to keep application transparency and manageability in mind and to embed metadata and lineage into the process from the very beginning. Requirements for transparency are not going away; they are only becoming more important. A metadata-driven approach is one way to achieve this.

Data Lineage Approach

Going one step further, there are various approaches to lineage, suited to different use cases, databases, and goals.

Production Code-Based Lineage

Production code-based lineage presents the most complete lineage, including all dependencies. This is helpful for root-cause and impact analysis, as well as for transparency of your environment, especially when you need to understand all possible impacts before making an adjustment. Imagine that you are dropping a column from a table. You need to know everywhere that data is used, and every branch of code matters. Even branches that never run, run infrequently, or sit deep inside an if-clause need to be captured and analyzed. If they're not, they may cause issues when you deploy the change. In the worst case, they can cause an issue years later when the condition is finally met, leaving your team scrambling to find out why the code failed and to fix it quickly as an incident.
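The difference between code-based and runtime lineage shows up even in a tiny example. The sketch below assumes a hypothetical transformation that reads columns as `row["..."]`. It uses Python's standard `ast` module to find every column referenced in any branch, and a dict subclass to record what a single ordinary run actually reads. Real code-based lineage tools parse SQL, ETL jobs, and scripts far more thoroughly; this only illustrates the idea.

```python
import ast

# Hypothetical transformation code. The legacy branch rarely runs, so a
# runtime observation may never show it reading the "discount" column.
TRANSFORM_SOURCE = '''
def transform(row, legacy_mode=False):
    total = row["price"] * row["quantity"]
    if legacy_mode:
        total -= row["discount"]
    return total
'''


def referenced_columns(source):
    """Statically collect every column read as row["..."] in any branch.

    Assumes Python 3.9+, where a subscript's slice is the expression itself.
    """
    cols = set()
    for node in ast.walk(ast.parse(source)):
        if (isinstance(node, ast.Subscript)
                and isinstance(node.value, ast.Name) and node.value.id == "row"
                and isinstance(node.slice, ast.Constant)
                and isinstance(node.slice.value, str)):
            cols.add(node.slice.value)
    return cols


class RecordingRow(dict):
    """A dict that remembers which keys were actually read at runtime."""

    def __init__(self, *args, **kwargs):
        super().__init__(*args, **kwargs)
        self.read = set()

    def __getitem__(self, key):
        self.read.add(key)
        return super().__getitem__(key)


namespace = {}
exec(TRANSFORM_SOURCE, namespace)  # define transform() from the scanned source
row = RecordingRow(price=10.0, quantity=3, discount=2.0)
namespace["transform"](row)  # one ordinary run: legacy_mode stays False

static_cols = referenced_columns(TRANSFORM_SOURCE)
print("code-based:", sorted(static_cols))
print("observed at runtime:", sorted(row.read))
print("safe to drop 'discount'?", "discount" not in static_cols)
```

Only the static scan reveals that dropping `discount` would break the legacy branch.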

Data-Record Lineage (Traceability)

Often referred to as traceability, this approach is critical for compliance, where the question is really about a specific data record and its movement through the environment. This type of lineage is important for financial services reporting, healthcare reporting, or any other reporting where the origin of each data point is critical.

In this type of lineage, the goal is to trace every single number back to its point of origin, which goes beyond what we traditionally think of as data lineage. In other words, data lineage documents the data life cycle, while data traceability evaluates the data itself and whether it follows the rules of its life cycle and proper usage.
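Record-level traceability can be pictured as every derived value carrying the identifiers of the source records it came from. The `Traced` type, its helpers, and the record IDs below are hypothetical: a minimal sketch, not how any particular system stores provenance.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Traced:
    """A value plus the IDs of the source records it was derived from."""
    value: float
    sources: frozenset = field(default_factory=frozenset)


def load(record_id, value):
    """Wrap a raw source value with its own record ID as provenance."""
    return Traced(value, frozenset({record_id}))


def add(a, b):
    """Add two traced values; the result inherits both sets of sources."""
    return Traced(a.value + b.value, a.sources | b.sources)


def total(items):
    acc = Traced(0.0)
    for item in items:
        acc = add(acc, item)
    return acc


loans = [
    load("core_banking:loan:1001", 250.0),
    load("core_banking:loan:1002", 125.5),
    load("leasing:contract:77", 40.0),
]
reported = total(loans)  # a reported figure that can be traced to its records
print(reported.value, sorted(reported.sources))
```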

Runtime Lineage (Observability)

Runtime lineage captures what has actually been run and is often used more as an observability tool. It is especially popular with databases and data integration tools. With databases, the query log typically captures individual executed queries with some basic context. It captures queries regardless of who or which tool executed them, giving a solid overview of what is being run against the database. This makes it very useful for seeing actual individual queries, including the number of rows transferred. However, it misses significant context. For example, when a query is executed from within a stored procedure, that information is missing. Similarly, when a query is executed from a reporting or data integration tool, information about the particular job is missing. Runtime lineage is a critical element in root-cause analysis, that is, finding where an issue in a piece of data originated.
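To illustrate both the value and the gap, the sketch below derives table-level edges from a few hypothetical query-log entries using simple regular expressions. It assumes a plain INSERT INTO ... SELECT ... FROM/JOIN pattern; a real implementation would need a proper SQL parser. The resulting edges can carry row counts, but the `job` field stays empty because the log does not say which procedure or tool issued the statement.

```python
import re

# Hypothetical query-log entries: who ran the statement, its text, and rows
# affected. There is no field saying which procedure or job issued it.
QUERY_LOG = [
    {"user": "etl_svc", "rows": 1200,
     "sql": "INSERT INTO dwh.sales SELECT o.id, c.region FROM stg.orders o "
            "JOIN stg.customers c ON o.customer_id = c.id"},
    {"user": "analyst", "rows": 15,
     "sql": "SELECT region, SUM(revenue) FROM dwh.sales GROUP BY region"},
]

# Deliberately naive patterns; real tools need a full SQL parser.
INSERT_SELECT = re.compile(r"INSERT\s+INTO\s+([\w.]+)\s+SELECT\b", re.IGNORECASE)
SOURCES = re.compile(r"\b(?:FROM|JOIN)\s+([\w.]+)", re.IGNORECASE)


def runtime_edges(log):
    """Derive table-level edges from executed INSERT ... SELECT statements."""
    edges = []
    for entry in log:
        target = INSERT_SELECT.search(entry["sql"])
        if target is None:
            continue  # plain reads do not move data, so they add no edge
        for source in SOURCES.findall(entry["sql"]):
            edges.append({
                "source": source,
                "target": target.group(1),
                "rows": entry["rows"],
                "job": None,  # unknown: the log lacks procedure/job context
            })
    return edges


for edge in runtime_edges(QUERY_LOG):
    print(edge)
```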

Design-Time Lineage (Modeling Tools)

Data environments are constantly changing. Sometimes, despite your best efforts, those changes have a negative impact that isn't apparent right away. For example, say you're creating a new app. You need to know how one small change in the code will ripple down the line before you actually implement it. You might think runtime lineage can solve this problem, but it only looks at the environment that is currently running (root-cause analysis), not a future model. What you need is impact analysis, which is where design-time lineage comes in.

Modeling is an architectural, metadata-driven approach that lets you observe and manipulate the environment before it actually exists, without affecting systems or data structures that are currently active. It also lets you move beyond data structures and include the code that moves the data around. Comparing design-time lineage against code-based or runtime lineage gives more complete insight for planning. Ultimately, this combination of root-cause and impact analysis can help you reduce unexpected, out-of-process changes that would cause issues later.
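Comparing the two views can be as simple as a set difference, assuming both design-time and runtime lineage have been normalized to the same (source, target) edges. The table names below are hypothetical.

```python
# Hypothetical table-level lineage, normalized to (source, target) edges.
DESIGN_TIME = {
    ("stg.orders", "dwh.sales"),
    ("stg.customers", "dwh.sales"),
    ("dwh.sales", "mart.revenue"),   # planned flow, not deployed yet
}
RUNTIME = {
    ("stg.orders", "dwh.sales"),
    ("stg.customers", "dwh.sales"),
    ("tmp.hotfix", "dwh.sales"),     # running, but never modeled
}

# Impact analysis: flows that runtime observation cannot see yet.
planned_not_running = DESIGN_TIME - RUNTIME
# Out-of-process changes worth investigating before they cause issues.
running_not_designed = RUNTIME - DESIGN_TIME

print("planned, not yet running:", sorted(planned_not_running))
print("running, not in the design:", sorted(running_not_designed))
```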

The Caveat

Runtime lineage isn't always enough on its own.

Design-time and runtime lineage are good for different uses. However, there is a large set of use cases where runtime lineage is not good enough: you can’t do any sort of end-to-end or application-to-application impact analysis with runtime lineage alone.

The challenge is that you can’t observe something that isn’t running. But that doesn’t mean something that isn’t running can’t, or shouldn’t, have lineage. If the data is connected to something that doesn’t run yet but will run in the future, you need to include it in your lineage discussions and planning, even though you may think you can’t simply because it isn’t running yet. With design-time lineage, on the other hand, you can observe the planned flows, but you don’t see the syntax of the tool that is doing the writing.

Find the Right Level of Lineage for Your Organization

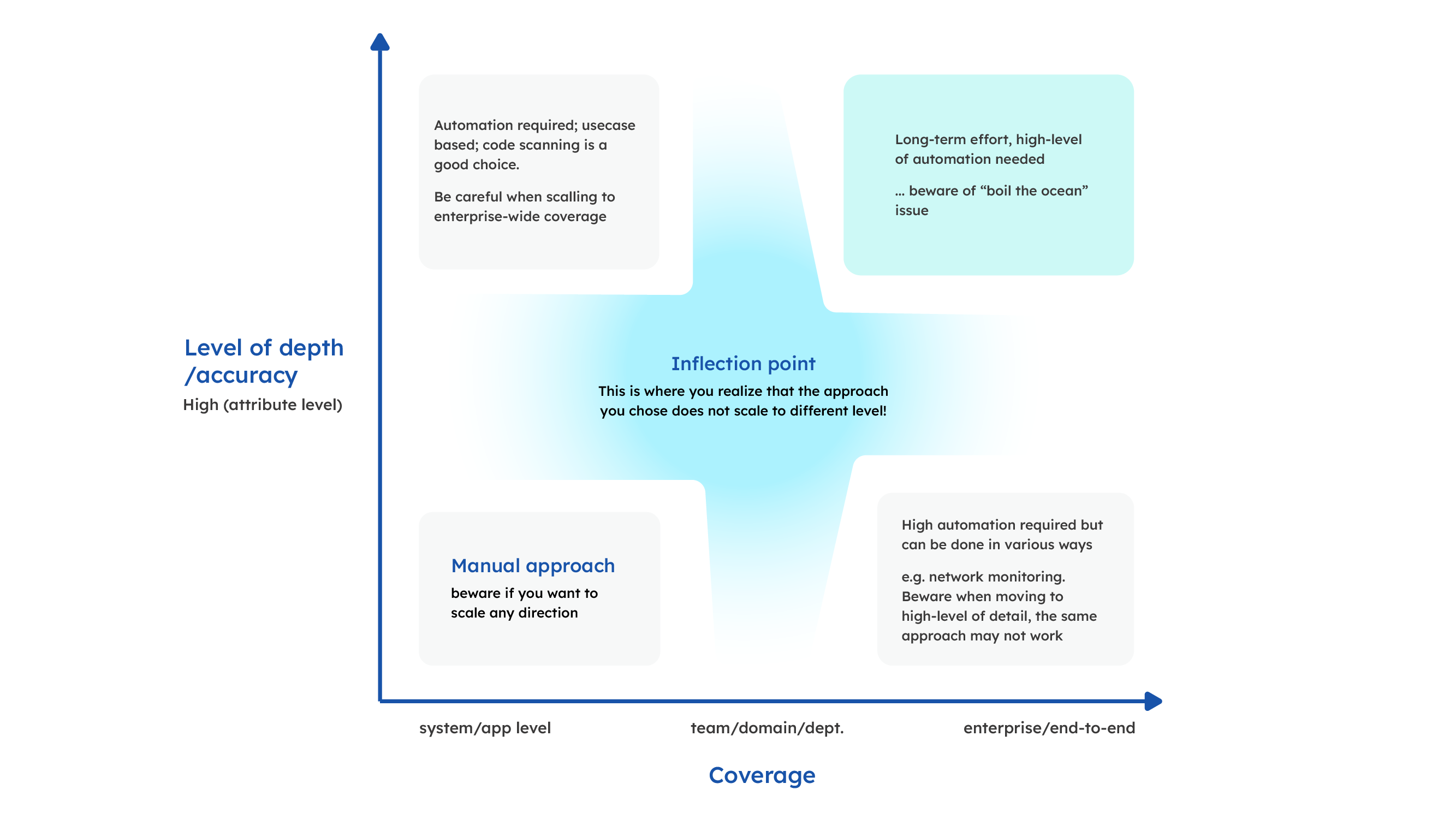

Linking the depth, breadth, and approach of lineage at various granularities is not an easy task.

Think of it as creating a map of today’s US road network: now that all the roads are built, how do you actually create a map? Do you start with coast-to-coast highways, but then get lost in the “last-mile” problem? Do you start building a detailed map of a town, but miss the big picture of the country? Or do you do it completely differently, e.g., by monitoring traffic and speed using cellphone positions? Different approaches provide different coverage, levels of accuracy, and detail. Data lineage is similar: different approaches are suited to different use cases and needs.

Steps to Find the Granularity of Lineage You Need

Step 1

Consider the project or outcome you want to use lineage to achieve. This tells you the level of detail and coverage needed from a depth and breadth perspective.

Step 2

Choose the approach to collect it.

Note that the approach may differ between the environment you have already built and the environment you are going to build. With the latter, you can design the environment with lineage in mind; for example, developers can use tagging libraries to generate lineage information at both design time and runtime.
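As an illustration of lineage-aware development, here is a hypothetical tagging decorator (not the API of any particular library) that records declared lineage when a job is defined (design time) and emits an event each time the job actually runs (runtime). A job that is defined but never executed still appears in the design-time lineage.

```python
import functools
from datetime import datetime, timezone

DESIGN_LINEAGE = []   # filled when jobs are defined (design time)
RUNTIME_EVENTS = []   # filled each time a job actually runs (runtime)


def lineage(inputs, outputs):
    """Hypothetical tagging decorator declaring a job's inputs and outputs."""
    def decorate(func):
        DESIGN_LINEAGE.append(
            {"job": func.__name__, "inputs": list(inputs), "outputs": list(outputs)})

        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            result = func(*args, **kwargs)
            RUNTIME_EVENTS.append({
                "job": func.__name__,
                "inputs": list(inputs),
                "outputs": list(outputs),
                "finished_at": datetime.now(timezone.utc).isoformat(),
            })
            return result
        return wrapper
    return decorate


@lineage(inputs=["stg.orders"], outputs=["dwh.sales"])
def load_sales():
    return 42  # placeholder for the real load logic


@lineage(inputs=["dwh.sales"], outputs=["mart.revenue"])
def build_revenue_mart():
    return None  # defined, but not scheduled yet


load_sales()
print(len(DESIGN_LINEAGE), "jobs declared;", len(RUNTIME_EVENTS), "run event(s)")
```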

Step 3

Consider scalability.

We often see two approaches that are destined to fail: (1) starting manually with low coverage and low detail, and then trying to cover the end-to-end space at a higher level of detail by distributing the responsibility for building it to individual teams; or (2) a "boil the ocean" approach without milestones or smaller goals where lineage can be used and proven. In both cases, once you start scaling, you realize that the approach that once seemed optimal does not actually scale well and you need to change course.

We recommend that you plan what you want to achieve in the long term. Consider which level(s) of detail will be needed, bearing in mind that you can always aggregate detailed lineage to a higher level in an automated fashion, but it does not work the other way around. Then use an agile, project-based approach. Do not try to boil the ocean. Instead, find where lineage of a particular level of detail and accuracy is needed, and build it using the right approach to fit the long-term goal.
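The asymmetry mentioned above is easy to see in code. Assuming column names follow a hypothetical `system.dataset.column` convention, column-level edges can be rolled up mechanically to dataset or system level. In this sketch, edges that collapse onto a single node (flows within one dataset) are dropped.

```python
# Hypothetical column-level edges; names follow "system.dataset.column".
COLUMN_EDGES = [
    ("crm.orders.amount", "dwh.sales.revenue"),
    ("crm.orders.id", "dwh.sales.order_id"),
    ("erp.costs.unit_cost", "dwh.sales.cost"),
    ("dwh.sales.revenue", "bi.kpi.profitability"),
]


def aggregate(edges, level):
    """Roll edges up to the first `level` name parts (2 = dataset, 1 = system)."""
    rolled = set()
    for src, dst in edges:
        s = ".".join(src.split(".")[:level])
        d = ".".join(dst.split(".")[:level])
        if s != d:  # drop flows that stay inside one node at this level
            rolled.add((s, d))
    return rolled


print(sorted(aggregate(COLUMN_EDGES, 2)))  # dataset level
print(sorted(aggregate(COLUMN_EDGES, 1)))  # system level
```

Going the other way is not possible: from the dataset edge `("crm.orders", "dwh.sales")` alone, you cannot tell which source column feeds `dwh.sales.revenue` and which feeds `dwh.sales.order_id`.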
